The microchip that spawned the personal computer revolution is 25 years old. And the revolution ain’t over yet.

By Wilson da Silva

THE WORLD CHANGED forever on November 15, 1971. And hardly anyone noticed.

Watergate was still seven months away. Britain had just voted to join the European Economic Community, India and Pakistan were again at war, and Columbo had debuted on television. It was the Year of the Pig, Mao Zedong was still in power, and the biggest news of that day was the re-admission of China to the United Nations.

Yet on that same morning 25 years ago, a small start-up company in California known as Intel, barely three years old, put out a press release that signalled the dawn of the Digital Age. “Announcing a new era in integrated electronics,” it said breathlessly, as press releases often do. And for once, it was an understatement.

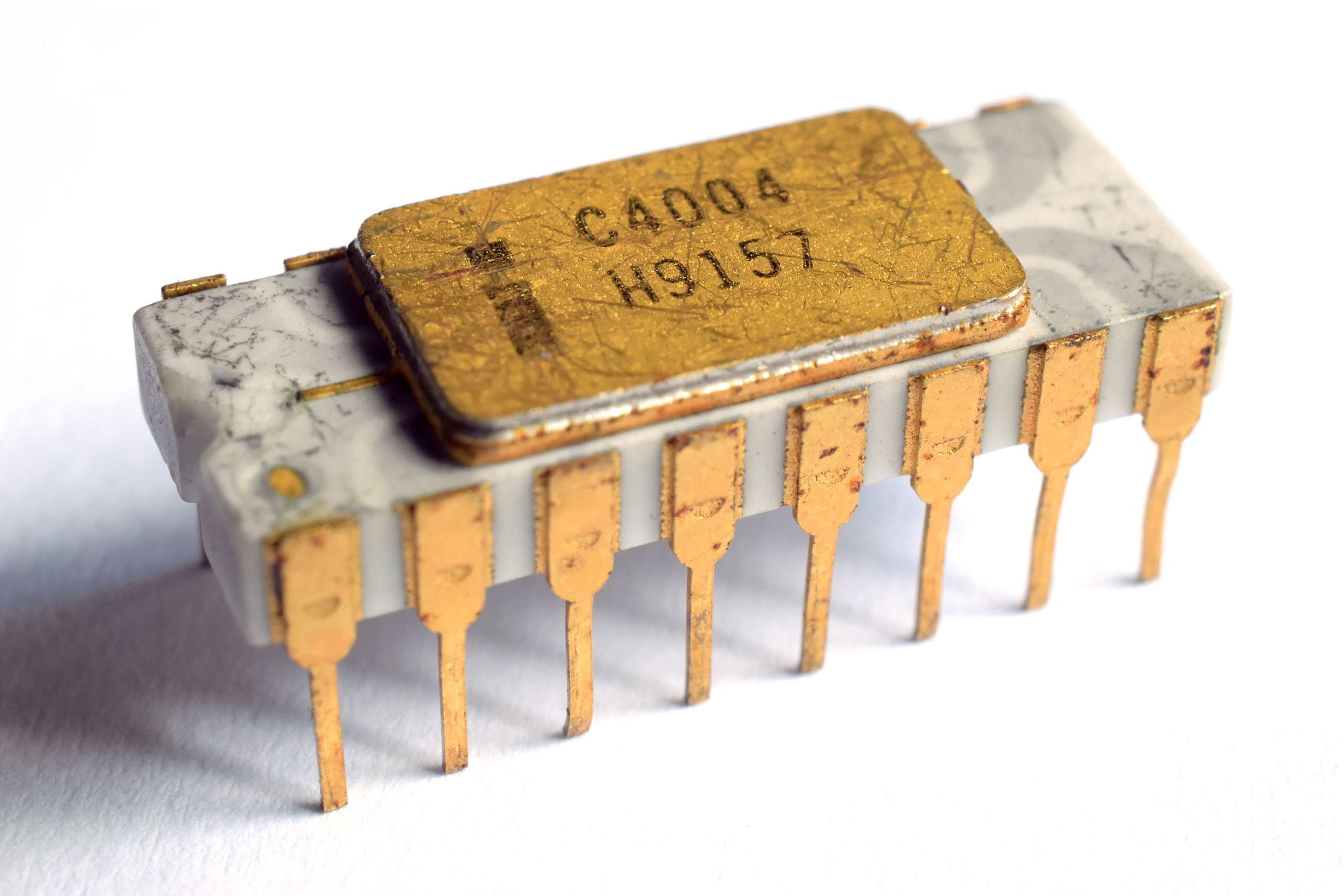

The product launched was the Intel 4004, the first general-purpose “microprocessor” and the world’s first computer chip. It was an innocuous sliver of silicon in a dark metal casing, with fat metal electrodes for legs, looking for all the world like a headless electronic cockroach. With 2,300 transistors, it could process what must have seemed like an astounding 60,000 instructions a second. In today’s tech-talk, it was a 4-bit microprocessor with an operating speed of 100,000 cycles per second, or 0.1 megahertz (MHz). It cost US$200 or, in today’s terms, US$3,330 per MIPS (million instructions per second).

That modest chip represented a revolution so powerful it would sweep the world and eventually re-shape it. In 1971, offices were filled with paper: order books and invoice folders, carbon copies and suspender files, forms in triplicate, memos written by hand, and desks populated with rubber stamps and typewriter ribbons. Armies of busy clerks swarmed around the paper mountains: sorting, marking, stamping, filing. Today, the office is a more contemplative place, broken only by the gentle hum of computers and the click-clack of keyboards; the army of clerks has been replaced by a platoon of skilled information workers or – as U.S. Labour Secretary, economist and author Robert Reich describes modern workers – “symbolic analysts”.

Today, that little-known company in Santa Clara, California, is the world’s largest manufacturer of microprocessors, with 42,000 employees and annual revenue of US$16.2 billion. Intel’s latest chips, the Pentium and Pentium Pro, both trace their lineage back to the 4004. The high-end Pentium Pro chip has 5.5 million transistors, a clock speed of up to 200 MHz, and can process 381 million instructions per second. Within the span of a human generation, the clock speed and the number of transistors that can fit on a microchip have soared roughly 2,000-fold.

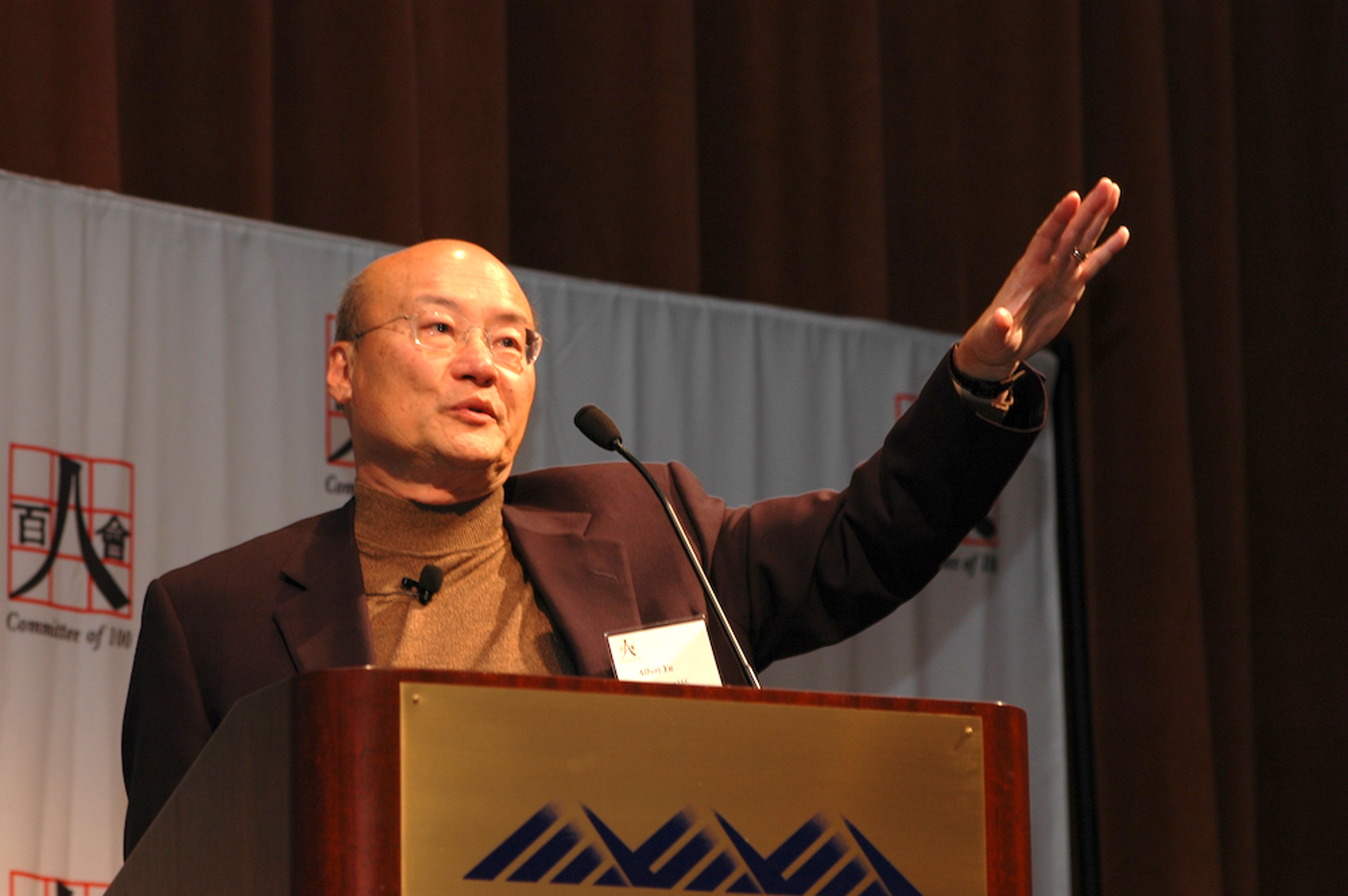

“None of us thought it was going to be this big,” Albert Yu, senior vice-president at Intel, told The South China Morning Post. “We thought it would be substantial, we thought it had potential. But we had no idea it would go this far.”

When the 4004 chip hit the market, International Business Machines Corp. was making big, heavy, room-crowding mainframe computers with spinning magnetic tapes and metal desks where operators flicked switches or fed perforated paper punch cards into slots. The arrival of the 4004 made hardly a wave: the reaction from industry to Intel’s innovation was largely “So what?”

Some did take an interest: it became obvious that the complex mathematical calculations performed on slide rules, which had propelled men to the Moon in Apollo 11 only two years earlier, could be performed flawlessly and instantaneously using the silicon chip. Soon, the first programmable electronic calculators were born, as were electronic cash registers, traffic light controllers that could sense approaching cars, and digital scales. But few really understood the true power of the chip.

Intel had arrived at the 4004 chip by accident. At the time, logic controllers for machines had to be custom-made and task-specific. In 1969, Intel received an order from Japan’s Busicom to manufacture 12 chips for a new line of desktop, spool-paper electric calculators. Intel engineer Ted Hoff had an idea: rather than make 12 separate chips, maybe he could design a single general-purpose chip – a logic device that could retrieve the specifics of each application from a silicon memory. Nine months later, he and fellow engineer Federico Faggin created a working CPU, or central processing unit – the heart of today’s personal computers.

Both Hoff and Faggin quickly realised the chip’s potential: that single US$200 microprocessor could do what only two decades before had taken a machine with 18,000 vacuum tubes, occupying 85 cubic metres and weighing 30 tonnes: perform the tasks of a general-purpose computer. Even in 1969, there were only 30,000 computers in the world; they were expensive and performed calculations cumbersomely.

Hoff tried to convince Intel to buy the chip back from the Japanese, who under the contract owned the intellectual rights. Intel founder Bob Noyce, who in 1959 at Fairchild Semiconductor had co-invented the printed-board integrated circuit (the basis of all solid-state electronics today), also saw its potential, as did fellow founder Gordon Moore. Others in the small company were not so sure: Intel was in the business of building silicon memory – CPUs might get in the way.

Finally, the four men won the doubters over, and in 1971 Intel offered to return Busicom’s US$60,000 investment in exchange for the rights to the chip. Busicom, struggling financially at the time, accepted the offer. The deal hardly made a ripple, even at Intel. Few realised that it may well have been the deal of the century.

After the birth of the Intel 4004, not much happened at first. In the year that followed, more American astronauts walked on the Moon in Apollo 16, Polaroid launched its first instant colour camera, U.S. President Richard Nixon visited China and The Godfather hit the cinemas. But that year also saw Intel launch the 8008 chip – an 8-bit microprocessor able to address 16,000 bytes, or 16 KB, of memory. It could process 300,000 instructions per second – a five-fold improvement on the year before.

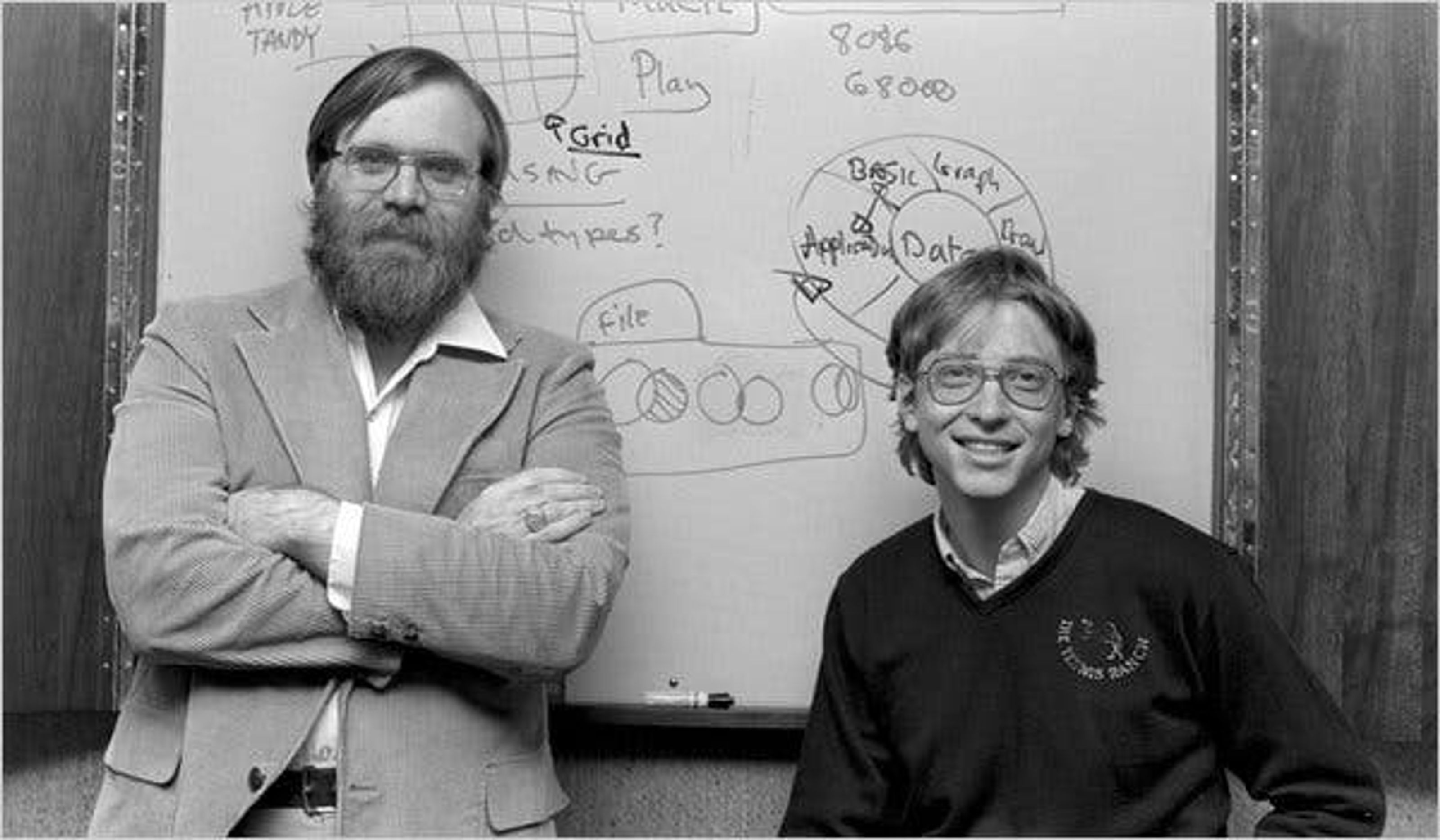

It was a confirmation of “Moore’s Law”: in 1965, a seemingly bold Gordon Moore, before founding Intel, stated that the number of transistors that could be crammed into a chip would probably double every 18 months – generating a huge increase in power and a rapid decrease in cost. That same year – 1972 – Atari was founded by Nolan Bushnell, and “Pong” – the black-and-white, tennis-like computer video game that was the world’s first – was released commercially. Also that year, two young and ambitious computer aficionados, Paul Allen and Bill Gates, seized on the Intel 8008 and developed a traffic flow recording system, creating a company called Traf-O-Data.

By year’s end, things began to speed up; more and more people began to see the potential of the microprocessor. In 1973, Scelbi Computer Consulting launched the Scelbi-8H computer kit, a build-it-yourself computer based on the Intel 8008. It had 1KB of random-access memory (RAM) and sold for US$565. In France, engineers perfected the Micral, the first pre-assembled computer, also based on the Intel 8008 microprocessor.

The term “microcomputer” first appeared in print describing the Micral; although sold in the United States, it failed to take off. In April of 1973, Intel launched another chip: the Intel 8080, an 8-bit microprocessor with 6,000 transistors, a throughput of 3 million instructions per second and a seemingly awesome 64KB of memory. Radio Electronics magazine ran a feature article showing how to build a “microcomputer” using the chip, a design it dubbed the “Mark 8”.

By then, the field was starting to crowd a little more. The Chicago-based radio and communications company Motorola launched its own microprocessor, the 6800, an 8-bit chip with 4,000 transistors and a five-volt power supply. But it took two computer enthusiasts in Albuquerque to really set computing on fire: working under the grandiose name of Micro Instrumentation and Telemetry Systems (MITS), and saddled with debts of US$365,000, Ed Roberts and Forrest Mims launched the Altair 8800, a ready-to-assemble computer based on the new Intel chip. Selling for a rock-bottom US$489, it made the cover of Popular Electronics in January 1975 – and set off an avalanche of orders.

After publication, MITS received 400 orders in one afternoon alone, and within three weeks had a US$250,000 backlog of orders. At a time when there were fewer than 40,000 computers in the world, the Altair – named after a planet in an episode of Star Trek – sold 2,000. It was the first machine to be called a “personal computer”, although it was a glorified square box with lights and toggle switches. If you wanted a keyboard, monitor or magnetic tape drive, you had to buy expansion cards separately.

Nevertheless, the Altair sparked much interest; Allen and Gates ditched development of Traf-O-Data and approached MITS, offering to write an operating system for the Altair. The duo called themselves “Micro-Soft”, a business name they did not formalise (dropping the hyphen) until the following year. The operating system they developed, written in a version of the BASIC computer language, was the first piece of PC software ever sold.

What followed was an explosion of development in the application of both Intel and Motorola chips. A rash of small start-ups appeared with new models, and just as often disappeared, their names now long forgotten: Kenbak, IMSAI, Sol, Jupiter II, Southwest Technical. Meanwhile, at established companies like Xerox and Digital Equipment Corp., engineers broke new ground in personal computer design, but management – seeing no application for the machines – scuttled most of the projects. At Digital, work that began in 1972 on the “DEC Datacenter” – what may well have been the first computer workstation – was halted when management said they could see no value or application for the product.

And they had a point; in 1975, despite all the market activity, personal computers were still the preserve of hobbyists. That all changed in December 1976, when Steve Wozniak and Steve Jobs displayed to a Californian computer enthusiasts’ club a prototype of the Apple II. Their earlier kit model, the Apple I, priced at US$666.66, had done a roaring trade; enough for the duo to sell their cars and programmable calculators to form – on April Fool’s Day 1976 – Apple Computer. The next model, the ready-to-use Apple II – amazingly complete for the time with colour monitor, sound and graphics – was launched the following year.

By the end of 1977, the Commodore PET and the Tandy TRS-80 had also entered the fray. Apple developed a floppy disk drive, and then a PC printer. Word processing and games were the major applications. But it was not until 1979, with the launch of an accounting spreadsheet program called VisiCalc for the Apple II, that the world really took notice of personal computers. For the first time, everyone could see what computers might do: instant calculations. Figures altered on a budget spreadsheet, for example, would automatically flow through to other parts of the document and update them, cascading figures up or down.

By 1980, the personal computer industry was becoming too big to ignore. IBM, scared into the market, created a crack unit of engineers in August 1980 to develop its own personal computer, a project codenamed “Acorn”. With no time to develop a chip, Big Blue went to Intel, which set about configuring its new 8088 chip for IBM. With no time to develop an operating system, IBM also contracted a fledgling Seattle computer company, Microsoft, to write one, impressed by the young Bill Gates and his pioneering work with Paul Allen developing a system for the Altair 8800 years before.

In a piece of bravado that is now a part of computer industry mythology, Gates confidently detailed the idea of DOS to IBM, and agreed to license it – before Microsoft even owned it. Known as QDOS (quick and dirty operating system), it actually belonged to Seattle Computer Products – but Gates did have a licence to use it. After closing the IBM deal, Gates bought rights to the system from the troubled Seattle company and renamed it MS-DOS.

When the IBM PC was launched in August 1981, it single-handedly legitimised the personal computer as a serious tool. Relying on a 4.77 MHz Intel 8088 chip, and with only 64KB of RAM and a single floppy drive, it went on sale for a pricey US$3,000. And yet it sold like hotcakes. With the marketing muscle of IBM and the stamp of business approval it brought, both Intel and Microsoft were suddenly guaranteed a future.

But it had a knock-on effect too: suddenly, personal computers made by Apple, Commodore, Tandy and newcomers like Sinclair gained instant respect. Within a year, others were on the scene, or had crash programs to get in: Compaq, Texas Instruments, Epson, Atari, Amiga, Osborne, Olivetti, Hewlett-Packard, Toshiba, Zenith. Others muscled into the microchip manufacturing stakes, most notably Japan’s NEC Corp, now the second-largest semiconductor maker, and Texas Instruments. Even Digital, its management now convinced, had entered the market in a big way.

By the end of 1981, an estimated 900,000 computers had been shipped worldwide, and Apple became the first PC company to reach US$1 billion in annual sales. Application programs like dBase and Lotus 1-2-3 flooded the market, and by the end of 1982, another 1.4 million personal computers had been shipped. By January 1983, the revolution was so apparent that Time magazine named the personal computer its “Machine of the Year”, in place of its usual Man of the Year.

“In the mid-1970s, someone came to me with an idea for what was basically the PC,” Intel chairman Gordon Moore recalls. “The idea was that we could outfit an 8080 processor with a keyboard and a monitor and sell it in the home market. I asked, ‘What’s it good for?’ And the only answer was that a housewife could keep her recipes on it. I personally didn’t see anything useful in it, so we never gave it another thought.”

No-one, it seems – not even the most avid enthusiasts – could foresee the widespread applicability and incredible popularity of the personal computer. Certainly none would have imagined that Intel’s first modest silicon chip would spawn, 25 years later, a tidal wave of more than 200 million personal computers that would populate offices and homes around the world. Nor predict that, at the close of the century, more PCs would be manufactured than cars and almost as many as televisions.

Along the way, many a promising company has been wrecked on the shoals by the unpredictable and fast-shifting currents of the burgeoning computer industry. Even industry stalwarts have had to paddle furiously on occasion: in 1993, IBM almost gagged on a year-end loss of US$4.96 billion, the highest annual loss for any U.S. company in history; Apple is still crawling out of what only a year ago looked like a yawning abyss of extinction. Intel and Motorola too have had their anxious moments with product lines that failed to catch fire.

Some years ago, Intel chief executive Andrew Grove coined a phrase that has become the unofficial motto of the personal computer industry: “Only the paranoid survive.” Next week, on November 18, Grove will give the keynote address at Comdex in Las Vegas, the annual meeting that brings together 210,000 information technology professionals from around the world. His theme? “A Revolution in Progress”.

And no wonder. Despite the technological rollercoaster ride of the past quarter century, it seems there’s a lot more ahead. “Moore’s Law looks like it’s going to continue,” Intel’s senior vice-president, Albert Yu, told a recent press briefing in Hong Kong. “I certainly don’t see any physical limits ... looking out to the next 10 years or so.

“Today, there’s close to six million transistors on a Pentium Pro chip. If you draw a straight line, you can imagine anywhere from 300 to 400 million transistors 10 years out,” said Yu, a 20-year veteran at Intel. “Just imagine the kind of power that will be available by that time.”
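Yu’s straight line can be checked with a few lines of arithmetic. The Python sketch below is illustrative only: the function names are made up for this example, the starting figure is the Pentium Pro’s 5.5 million transistors, and the 18-month doubling period is the version of Moore’s Law quoted earlier in this article, not a figure Yu gave.

```python
import math


def project_transistors(start_count, years, doubling_months=18.0):
    """Project a transistor count forward, assuming it doubles at a steady rate.

    doubling_months is an assumption (18 months, as the article states Moore's Law).
    """
    doublings = (years * 12.0) / doubling_months
    return start_count * 2.0 ** doublings


def implied_doubling_months(start_count, end_count, years):
    """Work backwards: what doubling period turns start_count into end_count?"""
    doublings = math.log2(end_count / start_count)
    return (years * 12.0) / doublings


if __name__ == "__main__":
    pentium_pro = 5.5e6  # transistor count quoted in the article

    projected = project_transistors(pentium_pro, years=10)
    print(f"18-month doubling, 10 years out: {projected / 1e6:.0f} million")

    # Yu's own range of 300 to 400 million implies a slightly slower pace.
    for target in (300e6, 400e6):
        months = implied_doubling_months(pentium_pro, target, years=10)
        print(f"{target / 1e6:.0f} million implies doubling every {months:.1f} months")
```

On these assumptions, strict 18-month doubling would put a chip at roughly 560 million transistors in 10 years. Yu’s more cautious 300 to 400 million corresponds to a doubling every 19 to 21 months or so.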